Hit the Goalie / With the Puck / In

the Neck

Micah Beck

University of Tennessee

24 March 2025

The

language of formal reasoning is often used in applied Computer Science to

characterize the properties of engineered systems. In a recent article I described how the

Internet’s Transport Control (TCP) Protocol is typically described as

“reliable” when in fact it only reduces

the occurrence of certain kinds of error. Treating TCP as if it were completely

reliable simplifies network programming by ignoring the consequences of error.

This is just one example of the widespread use of the reassuring but misleading

formal language.

Any

formal proof tells us that, if its assumptions are valid, and the rules of the

logic are sound, then its consequences will hold. Since

any engineered system will differ from the formal model on which such a proof

is based, direct application of its predictions in the real world is

necessarily fallacious. To understand the fallacy, we must find which element

of the proof fails.

Some

striking examples of such fallacies stem from proofs of

fault tolerance. It is a well-known formal result that a reliable

distributed system cannot be implemented using one that has no limits on the

number and type of communication errors that can occur. There is a whole

category of fault tolerant algorithms that make the reasonable-seeming

assumption that there is a fixed bound on the number of errors that will occur

during its execution. This may be justified by pointing out an assumption

commonly made in probabilistic reasoning, namely the independence of errors. If

errors are independent, then the probability of there being more than some

(possibly large) number of errors N during the execution of an algorithm will

be very small.

The

problem with algorithms based on such bounds is that they fail when more than

the assumed limit of N errors occurs. This can happen when the occurrence of

errors is not in fact independent due to any of a number of real-world

considerations. These include common software (such as Linux) used in many

computer systems, hardware design flaws and the presence of malicious actors.

The formal proof provides a roadmap of assumptions some of which the real world

will violate.

Lest

the reader think that this criticism is purely academic, I point out that the

widely used distributed consensus algorithm used in implementing blockchain has several vulnerable assumptions,

including:

● 51% Attack (a victim node in

overwhelmed by malicious neighbors)

● Integer Overflow (the victim

ignores an unexpected error mode)

● Routing Attacks (messages are

maliciously delayed, misdirected or dropped)

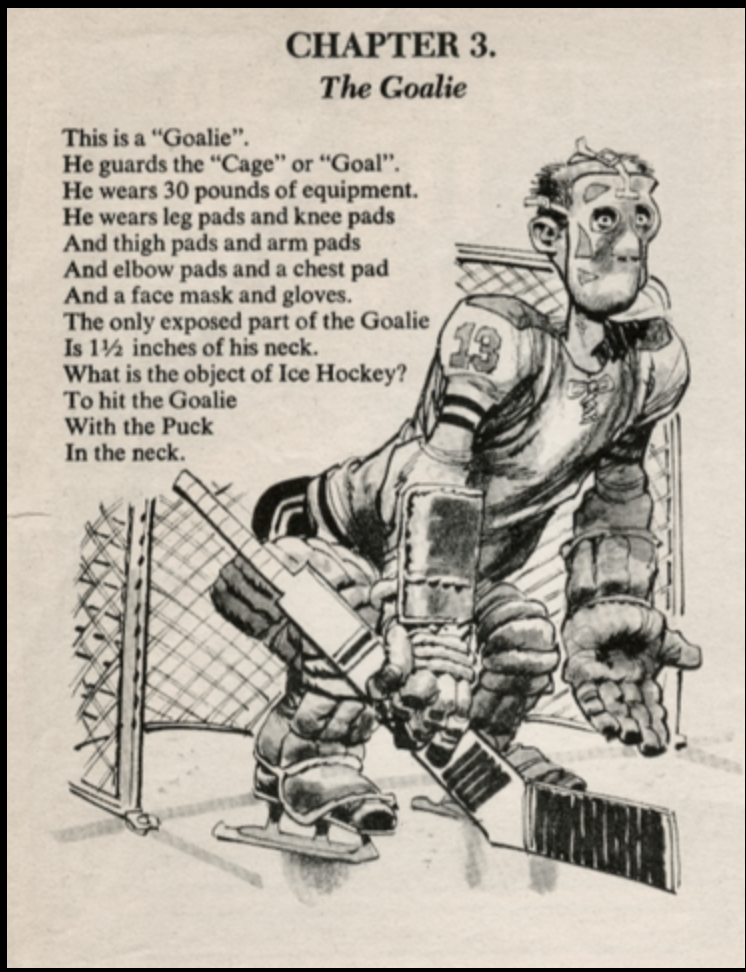

Consider

an analogy. Ice hockey is a dangerous game due in part to the use of a dense,

fast-moving puck. It is important that the organizers of the game be seen to

protect the players. A Mad magazine

hockey primer described the padding which protects every inch of the goalie’s

body except “1½ inches of his neck”. It then goes on to explain:

“What is the object of the game of Ice Hockey?

To hit the Goalie

With the Puck

In the neck”

In

any application of a formal proof to the real world, unrealistic assumptions

are the gaps which leave the neck of the application exposed to attack.

The

Goalie, The Mad Ice Hockey Primer, Mad Magazine #125 March,

1969.

Written by Larry Siegel. Illustrated by Jack Davis

https://hockeygods.com/system/gallery_images/22886/original.png?1727806481

Another

example of the use of formal language to mischaracterize practical systems

concerns deadlock in concurrency control. Operating system textbooks explain to

students the proof that there are four conditions which must hold for a system to be in

deadlock.

● Mutual Exclusion (a lock can

be held by at most one process at a time)

● Hold-and-Wait (a process

which has exclusive use of one resource can wait for access to another)

● Non-revocation (access to a

resource, once granted, cannot be rescinded)

● Circular waiting (a cycle

exists in the graph of waiting processes)

The

processes involved in circular waiting will never stop waiting and so can never

make any progress.

The

problem with this proof is that there are almost no practical systems where

non-revocation actually holds. If a deadlock does occur in a real system, and

it becomes partially or completely unresponsive, the universal solution

(perfected by the users of Microsoft Windows) is to turn it off and then back

on again. This has the effect of revoking all locks and regenerating all

processes. In POSIX-like operating systems a deadlock can usually be broken by

the receipt of a signal which wakes a waiting process.

The

reality of deadlock is that it is a condition that holds as long as the four

conditions listed all apply. During that time, no progress will be made by the

circularly waiting processes. Temporary deadlock can be used in the correct

design of a system when the factors that can end (by revoking a lock or killing

a process) are part of that design. This fact greatly complicates the analysis

of deadlock and is not generally taught. Instead, we teach a simple proof based

on a model of process management which predates interactive computing. Because

we like to teach students about proofs, and it makes the field of operating

systems seem more rigorous.

The

misuse of formal language so permeates applied Computer Science that it is

impossible to convince practitioners to speak in more realistic terms. Examples

of reality-based observations include:

● No archive is permanent. Even

a highly durable storage medium is connected through interfaces and protocols

with limited lifetimes (some as short as 5 years).

● Computation is not accurate.

Every wire, gate and storage cell has a non-zero

probability of error, and software is much less stable.

● No system is secure. Every

physical behavior leaks information through some side channel, and every

program makes an assumption or has a logic error that can be a vulnerability.

In all

such cases, application developers are trained to make assumptions which

contradict the truth, and which result in unanalyzed vulnerabilities to error

and malicious exploitation.