The field of Computer Systems Architecture has always been fueled by the need to harness the power of physical processes at scale. Before the advent of electronic digital computing, many pioneers worked with other physical phenomena: in the late 19th century Charles Sanders Peirce studied geodesy, including the behavior of pendulums; in the 1870s Sir William Thomson (who later became Lord Kelvin) developed analog tide-predicting machines; and in 1928 Vannevar Bush designed an analog computer that could approximately solve sixth-order differential equations.

The power of digital computing lies in an approximate truth:

- the characteristics of computational systems can be discretized into a finite number of distinct categories which can be used to represent symbols or digits (e.g. 0 and 1, decimal digits or alphanumeric characters), and

- deterministic operations can be defined on these symbols to produce predictable results that comprise the basis of computation (e.g. and, or, not, addition, comparison).

The digital overlay on analog circuits provides a means by which human designers can limit the variety of phenomena that might be used to automate computation. John von Neumann remarked that wind tunnels, essentially specialized analog computers, would be replaced by digital simulation. However, the digital overlay also limits our ability to make use of some very powerful operations, such as near-instantaneous multiplication, that are possible in analog circuits. Today, neuromorphic computing represents a hybrid of analog and digital computing.

The elements of the system developer's software toolkit, including operating systems, compilers and networks, all developed as means of gaining control over digital systems at scale and making them more convenient to use. Each of them is based in some way on the adoption of a model of use that differs from the underlying properties of the physical system, and in many cases on the acceptance of probabilistic (as opposed to deterministic) correctness. They create a useful illusion or, in more technical language, a virtual world.

Early computers were

single-user devices, and operating systems were developed to assist in the

tedious task of going from an “empty” boot configuration to one that could run

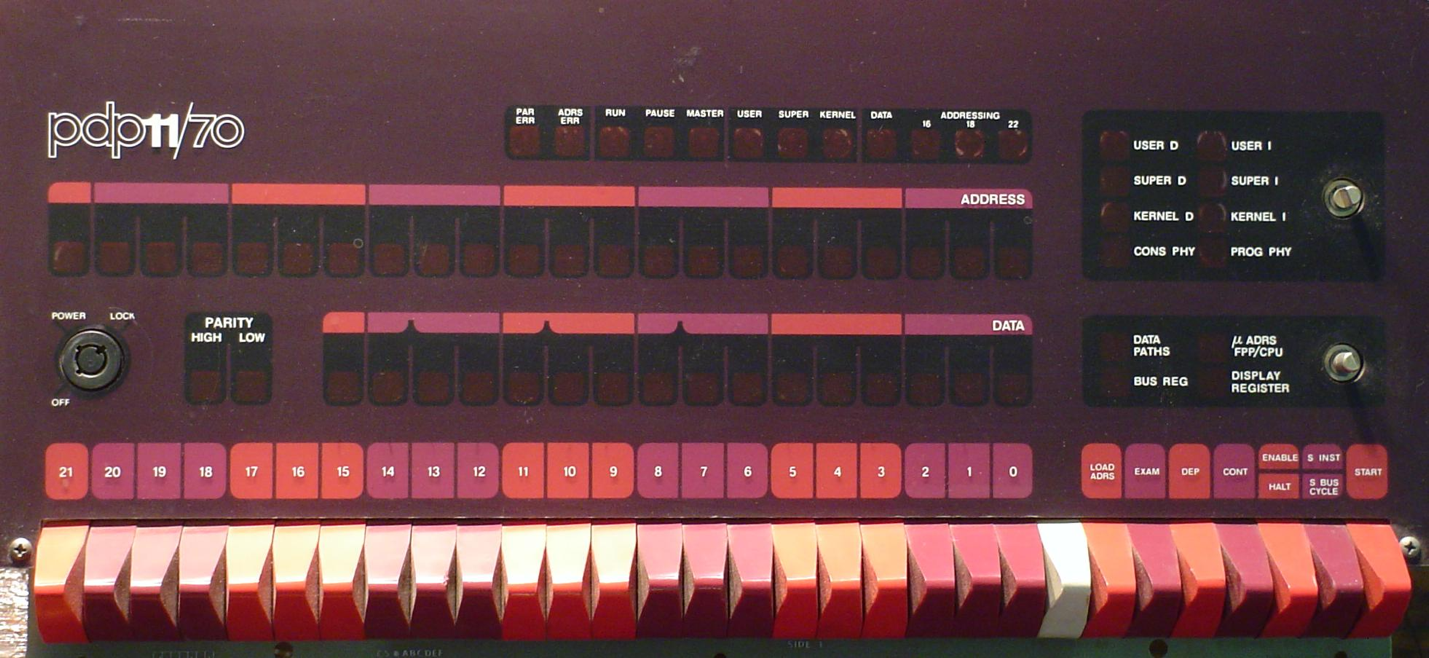

application code. As a teenager I entered the boot sequence (from a note taped to

the console) of a PDP-11 into its toggle switches (see Figure 1) one binary word at

a time, running the program which read the user interface from the card reader

and then using that to load an application and data from magnetic tape or disk.

Coordination of multiple sequential users was through the adoption of software

conventions in the use of shared resources (e.g. disk file system format).

Trust was a key element.

Figure 1: The PDP-11 console. By Retro-Computing Society of Rhode Island

- Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=4656467

Timesharing and multitasking created a persistent execution environment and automated both the loading of programs and the isolation of multiple users. Early virtual machines would implement a separate operating system image for each user within an isolated execution enclave. The notion of an “operating system process” (and later, threads) evolved as a more lightweight form of multiprogramming within a single operating system instance.

Virtual machines and processes presented an opportunity to enhance the execution model with apparent characteristics that in some ways exceeded the capabilities of the underlying physical system, at the expense of other resources. Virtual memory enabled the total main memory allocated to program execution to exceed the memory physically installed in the system, by using data movement and storage resources. The tradeoff was potentially slower program execution. Today, the native capabilities of a computing system and the services available to user-mode processes in a modern operating system (e.g. Linux or MS Windows) are as different as night and day.
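The virtual memory mechanism described above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not how any real kernel works: a fixed number of page frames stands in for installed memory, a Python dict stands in for secondary storage, and least-recently-used (LRU) replacement is assumed for choosing which page to evict. The class name `DemandPagedMemory` is hypothetical. The program addresses more memory than there are frames; the cost appears as page faults, each representing data movement to and from storage.

```python
from collections import OrderedDict


class DemandPagedMemory:
    """Toy demand-paged memory: a few page frames backed by a larger store.

    Assumptions for illustration only: LRU replacement, and a dict standing
    in for secondary storage (disk). Real kernels differ in many details.
    """

    def __init__(self, num_frames: int, page_size: int) -> None:
        self.num_frames = num_frames
        self.page_size = page_size
        # Resident pages in LRU order: least recently used first.
        self.resident: "OrderedDict[int, bytearray]" = OrderedDict()
        self.backing_store: dict[int, bytearray] = {}  # stand-in for disk
        self.faults = 0

    def _frame_for(self, page: int) -> bytearray:
        if page in self.resident:
            self.resident.move_to_end(page)  # hit: mark most recently used
            return self.resident[page]
        self.faults += 1  # page fault: data must move in from storage
        if len(self.resident) >= self.num_frames:
            victim, contents = self.resident.popitem(last=False)  # evict LRU
            self.backing_store[victim] = contents  # write it back to "disk"
        frame = self.backing_store.pop(page, None)
        if frame is None:
            frame = bytearray(self.page_size)  # first touch: zero-filled page
        self.resident[page] = frame
        return frame

    def write(self, addr: int, value: int) -> None:
        page, offset = divmod(addr, self.page_size)
        self._frame_for(page)[offset] = value

    def read(self, addr: int) -> int:
        page, offset = divmod(addr, self.page_size)
        return self._frame_for(page)[offset]


if __name__ == "__main__":
    mem = DemandPagedMemory(num_frames=4, page_size=256)  # 1 KiB "installed"
    for addr in range(4096):  # but the program uses a 4 KiB address space
        mem.write(addr, addr % 256)
    assert all(mem.read(addr) == addr % 256 for addr in range(4096))
    print(mem.faults)  # 32: each of the 16 pages faults once per pass
```

The program sees correct data across all 4 KiB even though at most 1 KiB is resident at any moment; the price is a trip to storage each time a sequential pass touches a page that is not resident.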

Such augmentation of the programming model has no effect on the range of computations that are possible with a given hardware configuration. All computer systems are in fact finite state machines, and so the class of problems that they can solve at arbitrary scale is limited to the recognition of regular languages. However, sophisticated runtime systems allow the simulation of much more powerful mechanisms, including any computable function, on datasets up to some maximum size. Beyond that point, the simulation may fail for lack of memory.
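The finite-state argument can be made concrete with a minimal Python sketch (the names `even_ones`, `balanced`, `max_depth` and `OutOfMemory` are hypothetical). A two-state machine recognizes strings with an even number of 1s, a regular language, at any input length using constant memory. Balanced parentheses is not a regular language, so a finite machine can only simulate its recognizer with a depth counter capped at `max_depth`, which stands in for the machine's finite memory; inputs nested more deeply than that cannot be handled.

```python
class OutOfMemory(Exception):
    """Raised when an input needs more state than the machine has."""


def even_ones(bits: str) -> bool:
    """Two-state finite automaton for the regular language of strings with an
    even number of 1s. Memory use is constant no matter how long the input."""
    state = 0  # 0: even number of 1s seen so far, 1: odd
    for b in bits:
        if b == "1":
            state ^= 1
    return state == 0


def balanced(parens: str, max_depth: int) -> bool:
    """Balanced parentheses is not a regular language: recognizing it needs
    an unbounded counter. A finite machine can only simulate the recognizer
    with a counter capped at max_depth (its finite 'memory')."""
    depth = 0
    for c in parens:
        if c == "(":
            depth += 1
            if depth > max_depth:
                raise OutOfMemory(f"nesting deeper than {max_depth}")
        elif c == ")":
            depth -= 1
            if depth < 0:
                return False  # a ")" with no matching "("
    return depth == 0


if __name__ == "__main__":
    print(even_ones("1" * 1_000_000))       # True
    print(balanced("(()())", max_depth=8))  # True
    try:
        balanced("(" * 9 + ")" * 9, max_depth=8)
    except OutOfMemory as e:
        print("simulation failed:", e)      # nesting deeper than 8
```

Raising `max_depth` enlarges the set of inputs the simulation handles, but any fixed value leaves some balanced strings out of reach.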

Paged virtual memory greatly extends that limit on arbitrary computation by using secondary storage and data movement, allowing programs to operate on larger datasets. Note, however, that a solution designed to operate within specific finite resources (for example, an external sort that explicitly stages data between main memory and disk) can sometimes operate on datasets even larger than the maximum enabled by demand paging. Thus paging does not extend the fundamental capabilities of the system; rather, it enables much simpler and more intuitive application development.
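External merge sort is one classic example of a solution designed around a fixed memory budget rather than relying on paging. In this minimal sketch, temporary files stand in for secondary storage, and the names `external_sort` and `chunk_size` are illustrative. It keeps at most `chunk_size` values in memory while forming sorted runs, then streams a k-way merge of those runs using the standard library's `heapq.merge`.

```python
import heapq
import tempfile
from typing import IO, Iterable, Iterator


def _spill_run(sorted_chunk: list[int]) -> IO[str]:
    """Write one sorted run to a temporary file (stand-in for disk)."""
    run_file = tempfile.TemporaryFile(mode="w+")
    run_file.writelines(f"{x}\n" for x in sorted_chunk)
    run_file.seek(0)
    return run_file


def external_sort(values: Iterable[int], chunk_size: int) -> Iterator[int]:
    """Sort a stream of ints while holding at most chunk_size of them in memory
    during run formation, then k-way merge the runs back from storage."""
    runs: list[IO[str]] = []
    chunk: list[int] = []
    for v in values:
        chunk.append(v)
        if len(chunk) >= chunk_size:
            runs.append(_spill_run(sorted(chunk)))
            chunk = []
    if chunk:
        runs.append(_spill_run(sorted(chunk)))
    try:
        # heapq.merge pulls lazily, keeping one pending value per run.
        yield from heapq.merge(*[(int(line) for line in f) for f in runs])
    finally:
        for f in runs:
            f.close()


if __name__ == "__main__":
    data = [5, 3, 9, 1, 7, 2, 8, 6, 4, 0]
    print(list(external_sort(data, chunk_size=3)))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

A production implementation would also limit how many runs are merged at once (multi-pass merging); this sketch opens them all together for brevity.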

What is true of demand-paged virtual memory is also true of many other operating system and networking mechanisms:

- File systems can be maintained through simple agreement between users, with minimal support for locking to manage concurrency. An architecturally protected file system provides safety and integrity assurances, but does not expand the range of possible applications that can (in principle) be implemented.

- Threads (lightweight multiprogramming) can in many cases be implemented by a user-level runtime system with lower overhead than OS threads. In these cases, OS-supported threads are a convenience to developers rather than a new capability.

- The guarantees provided by the Internet’s TCP protocol stack can just as easily be implemented at user level, as evidenced by the UDP-based QUIC libraries now gaining widespread use.
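The point about user-level threads can be illustrated with a minimal sketch that assumes cooperative scheduling: each "thread" is a Python generator, and a round-robin scheduler running in a single OS thread switches between them at every `yield`, without involving the kernel. The names `run`, `worker` and `Task` are hypothetical, and real user-level runtimes also need non-blocking I/O, which this omits.

```python
from collections import deque
from typing import Generator, Iterable

# A user-level "thread" is just a generator; each yield is a context switch.
Task = Generator[None, None, None]


def run(tasks: Iterable[Task]) -> None:
    """Round-robin scheduler for cooperative user-level threads. Everything
    runs inside one OS thread; switching tasks involves no system call."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)  # resume the task until its next yield
        except StopIteration:
            continue  # task finished: drop it
        ready.append(task)  # still running: requeue at the back


def worker(name: str, steps: int, log: list[str]) -> Task:
    for i in range(steps):
        log.append(f"{name}{i}")
        yield  # voluntarily give the CPU to the next task


if __name__ == "__main__":
    log: list[str] = []
    run([worker("a", 3, log), worker("b", 2, log)])
    print(log)  # ['a0', 'b0', 'a1', 'b1', 'a2']
```

The trade-off is the one the text describes: switching costs little more than a function call, but a task that never yields starves the others, a guarantee that OS-scheduled threads provide for the developer.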

These examples show that the nature of software system architecture is to extend the scope and size of problems that can be conveniently solved by application developers. These solutions are partial, and in many cases approximate: a kind of simulation of solutions to problems that cannot be completely and correctly solved by any finite computer system. The limiting factor is either compute power or memory. Thus, the reach of such incomplete solutions can be extended through the creation of huge multiprocessors with massive main memory and astronomical amounts of secondary storage. In the past, the main demand for such “supercomputer” installations came from data analysis and simulation in service of scientific research at universities and national laboratories.

Today, however, there is a new source of demand: the simulation of human discourse and creativity by generative artificial intelligence.

When I was in fifth grade I used to hang out with my best friend Jules Standen at the massive Harrods department store in London. In the lobby there was a machine which, when prompted with the name of any department, would spit out instructions on a slip of paper for navigating through the multistory building. We would choose an arbitrary destination and then delight in traveling through unfamiliar parts of the store to reach it. We enjoyed relinquishing our control.

Figure 2: Harrods department store, Knightsbridge, London. Public domain.

Donald Knuth at one time thought that a programmer had to work in assembly language to have a true understanding of the cost of computation. He later embraced the C language, which is not that different. Unconstrained use of dynamic memory allocation was thought by many early developers to be wasteful, unpredictable and unnecessary. Ken Thompson did not use it at all for internal Unix data structures such as process and open-file descriptors, which were kept in fixed-size tables. OS-supported fine-grained multiprocessing (threads) is still eschewed by the developers of some technical applications that are highly sensitive to variations in scheduling or to the cost of context switching.

Over time, tools which ease development at the cost of efficiency and control over resource utilization have been universally adopted. There is a whole subfield of high-level programming whose core belief is that automated tools can and should relieve the programmer of the burden of resource allocation and scheduling. There have been some impressive victories, such as the perfection of local instruction and data placement scheduling. But there have also been areas of disappointment, such as global instruction and data placement scheduling. Tools which enable quick and sometimes correct solutions have flourished, and that style of programming now dominates introductory programming and data structures courses.

Young people often swear by tools which provide quick answers to problems such as navigating the streets of their own city. Some are even proud of their inability to drive unassisted. In 2013 a young woman responded to a question about where she was from by saying “I’m from the Internet”. Today, our cognitive abilities are increasingly merged with problem-solving tools, even for simple tasks in our daily lives. Harrods has upgraded its store navigation system to a smartphone application that uses 500 beacons to locate users on an interactive map.

Today’s generative AI does not actually solve new problems; it is a tool for the imprecise collection and recombination of existing data and solutions at massive scale (a “stochastic parrot”).

By simulating discourse and creativity, it enables untrained practitioners to generate plausible and sometimes correct solutions to problems that would otherwise have seemed inaccessible to them. In that sense it is part of a natural progression that started when assemblers and boot programs were first burned into programmable read-only memory.

Sound reasoning is increasingly the exclusive domain of those who aspire to move human knowledge and capabilities forward, rather than to exploit the comforts bequeathed to us by the past. This is not a wrong turn; it is where we have been headed all along.

Jesus, take the wheel

Take it from my hands

'Cause I can't do this on my own

I'm letting go

So give me one more chance

— "Jesus, Take the Wheel", Some Hearts, Carrie Underwood, 2005